The goal of this section is to understand how the chance of at least one collision behaves as a function of the number of individuals , when there are hash values and is large compared to .

We know that chance is

While this gives an exact formula for the chance, it doesn’t give us a sense of how the function grows. Let’s see if we can develop an approximation that has a simpler form and is therefore easier to study.

The main steps in the approximation will be used repeatedly in this course, so we will set them out in some detail here.

🎥 See More

1.5.1Step 1: Only Approximate Terms that Need Approximation¶

While this might seem obvious, it’s worth noting as it can save a lot of unnecessary fiddling. We are trying to approximate

so all we need to approximate is

We can subtract the approximation from 1 at the end.

In other words, we’ll approximate instead.

1.5.2Step 2: Use to Convert Products to Sums¶

Our formula is a product, but we are much better at working with sums. The log function helps us turn the product into a sum:

Once we have an approximation to , we can use exponentiation to convert it to an approximation for what we want, which is .

1.5.3Step 3: Use Properties of ¶

This is usually the step where the main calculation happens. Remember that for small , where the symbol here means that the ratio of the two sides goes to 1 as goes to 0. The approximation might not be great for larger but let’s try it out anyway.

by the formula for the sum of the first positive integers.

1.5.4Step 4: Invert as Needed to Complete the Approximation¶

The hard work has been done, and now we just have to clean things up. Step 3 gave us

and so by exponentiation on both sides we get

Finally,

Now you can see why the rises sharply as a function of the number of people. Remember that is fixed and varies between 1 and . As increases, increases fast, essentially like . So decreases fast, which makes drop sharply; and that makes shoot up.

It’s worth noting that the main approxmation is in the middle of Step 3, where we use for small . We will encounter this approximation several times in the course.

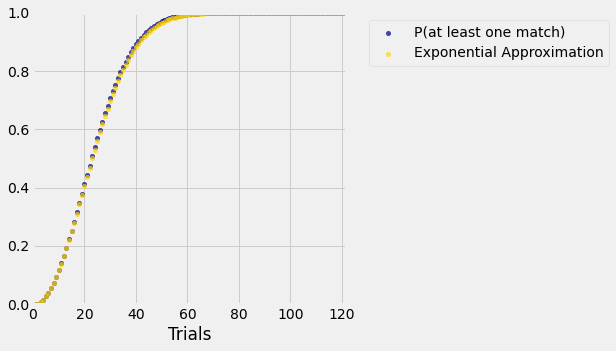

1.5.5How Good is the Approximation?¶

To see how the exponential approximation compares with the exact probabilities, let’s work in the context of birthdays. You can change in the code if you prefer a different setting.

To review the entire sequence of steps, we will redo our exact calculations and augment them with a column of approximations. We’ll use the slightly more careful approximation of the two above.

# All of this code is from the previous section.

N = 365

def p_no_match(n):

individuals_array = np.arange(n)

return np.prod((N - individuals_array)/N)

trials = np.arange(1, N+1, 1)

results = Table().with_columns('Trials', trials)

different = results.apply(p_no_match, 'Trials')

# Only the Exponential Approximation column is new

results = results.with_columns(

'P(at least one match)', 1 - different,

'Exponential Approximation', 1 - np.e**( -(trials - 1)*trials/(2*N) )

)

resultsThe first 10 approximations look pretty good. Let’s take a look at some more.

results.scatter('Trials')

plt.xlim(0, N/3)

plt.ylim(0, 1);

On the scale of this graph, the blue dots (the exact values) are almost indistinguishable from the gold (our exponential approximation). You can run the code again with the less careful approximation that replaces by and see that the approximation is still excellent.

What we learn from the second form of the approximation is that the chance that there is at least one collision among the assigned values is essentially where is a positive constant.

We will encounter the function again when we study the Rayleigh distribution later in the course.