This is a powerful method for finding expected counts. It is based on the observation that among $n$ trials, the number of “good” results can be counted by first coding each “good” result as 1 and each of the other results as 0, and then adding the 1’s and 0’s.

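If $X$ is the total number of good results among $n$ trials, then

$$
X = I_1 + I_2 + \cdots + I_n
$$

where for each $j$ in the range 1 through $n$, the random variable $I_j$ is the indicator of “the result of the $j$th trial is good”.

Now recall that if $I_A$ is the indicator of an event $A$, then $E(I_A) = P(A)$. That is, the expectation of an indicator is the probability of the event that it indicates.

So

$$
E(X) = E(I_1) + E(I_2) + \cdots + E(I_n) = P(\text{result of Trial 1 is good}) + P(\text{result of Trial 2 is good}) + \cdots + P(\text{result of Trial } n \text{ is good})
$$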

It is important to note that the additivity works regardless of whether the trials are dependent or independent.
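The counting idea can be sketched numerically. The example below is an illustration only: it assumes the trials are rolls of a fair die and that a “good” result is a six, and the names `rolls`, `indicators`, and `good_count` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)  # seeded so the run is reproducible

n = 10          # number of trials (assumed for illustration)
p_good = 1 / 6  # chance that a single roll is a six
reps = 100_000  # number of simulated repetitions of the n trials

# Each row is one repetition of n rolls of a fair die
rolls = rng.integers(1, 7, size=(reps, n))

# Code each "good" result (a six) as 1 and every other result as 0
indicators = (rolls == 6).astype(int)

# Adding the 1's and 0's in a row gives the number of good results
good_count = indicators.sum(axis=1)

# The average of each indicator column estimates P(trial j is good)
indicator_means = indicators.mean(axis=0)

print("sum of P(trial j is good):", n * p_good)
print("sum of indicator averages:", indicator_means.sum())
print("average of the counts:", good_count.mean())
```

The last two printed values agree up to rounding because averaging is itself additive; both should be close to $n \times \frac{1}{6}$.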


8.5.1 Expectation of the Binomial

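Let $X$ have the binomial $(n, p)$ distribution. Then $X$ can be thought of as the number of successes in $n$ i.i.d. Bernoulli $(p)$ trials, and we can write

$$
X = I_1 + I_2 + \cdots + I_n
$$

where for each $j$ in the range 1 through $n$, $I_j$ is the indicator of “Trial $j$ is a success”. Thus

$$
E(X) = E(I_1) + E(I_2) + \cdots + E(I_n) = p + p + \cdots + p = np
$$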

Examples of use:

The expected number of heads in 100 tosses of a coin is $100 \times 0.5 = 50$.

The expected number of heads in 25 tosses is 12.5. Remember that the expectation of an integer-valued random variable need not be an integer.

The expected number of times green pockets win in 20 independent spins of a roulette wheel is $20 \times \frac{2}{38} = 1.05$, roughly.
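The three examples above can be checked with SciPy's binomial distribution. This is a minimal sketch; the roulette line assumes a wheel with 2 green pockets out of 38, and the variable names are chosen here for illustration.

```python
from scipy import stats

# Expected number of heads in 100 and in 25 tosses of a fair coin
heads_100 = stats.binom.mean(100, 0.5)
heads_25 = stats.binom.mean(25, 0.5)

# Assumption: 2 green pockets among 38 equally likely pockets
green_20 = stats.binom.mean(20, 2 / 38)

print(heads_100, heads_25, round(float(green_20), 2))
```

Each value is just the number of trials times the chance of success on a single trial.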

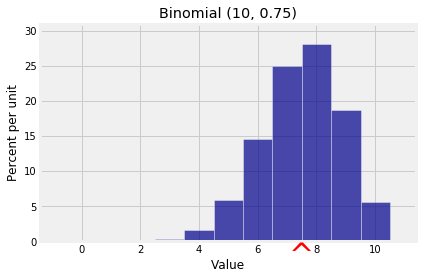

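```python
from datascience import *
from prob140 import *
import numpy as np
import matplotlib.pyplot as plt
from scipy import stats

k = np.arange(11)
probs = stats.binom.pmf(k, 10, 0.75)
bin_10_75 = Table().values(k).probabilities(probs)
Plot(bin_10_75, show_ev=True)
plt.title('Binomial (10, 0.75)');
```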

Notice that the calculation of the binomial expectation didn’t use the independence of the trials. Additivity of expectation works whether or not the random variables being added are independent. This will be very helpful in the next example.


8.5.2 Expectation of the Hypergeometric

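Let $X$ have the hypergeometric $(N, G, n)$ distribution. Then $X$ can be thought of as the number of good elements in $n$ draws made at random without replacement from a population of $N = G + B$ elements of which $G$ are good and $B$ bad. Then

$$
X = I_1 + I_2 + \cdots + I_n
$$

where for each $j$ in the range 1 through $n$, $I_j$ is the indicator of “Draw $j$ results in a good element”. Thus

$$
E(X) = E(I_1) + E(I_2) + \cdots + E(I_n) = \frac{G}{N} + \frac{G}{N} + \cdots + \frac{G}{N} = n\frac{G}{N}
$$

This is the same answer as for the binomial, with the population proportion of good elements $\frac{G}{N}$ replacing $p$.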

Examples of use:

The expected number of red cards in a bridge hand of 13 cards is $13 \times \frac{26}{52} = 6.5$.

The expected number of Independent voters in a simple random sample of 200 people drawn from a population in which 10% of the voters are Independent is $200 \times 0.1 = 20$.

These answers are intuitively clear, and we now have a theoretical justification for them.
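The role of dependence can be made concrete with the bridge hand. The sketch below first computes, exactly, the chance that the second card is red by conditioning on the first card; it comes out the same as the chance for the first card even though the draws are dependent. It then compares the expectation computed from the hypergeometric probabilities with $n\frac{G}{N}$. The names `p_first`, `p_second`, and `ev_from_pmf` are chosen here for illustration.

```python
from fractions import Fraction

import numpy as np
from scipy import stats

N, G, n = 52, 26, 13  # deck size, red cards, cards in a bridge hand
B = N - G

# Chance that the second draw is good, conditioning on the first draw
p_first = Fraction(G, N)
p_second = (Fraction(G, N) * Fraction(G - 1, N - 1)
            + Fraction(B, N) * Fraction(G, N - 1))
print(p_first, p_second)

# Expectation from the definition: sum of k * P(X = k)
k = np.arange(n + 1)
# SciPy's argument order: (k, population size, number good, sample size)
probs = stats.hypergeom.pmf(k, N, G, n)
ev_from_pmf = np.sum(k * probs)

print(ev_from_pmf, n * G / N)
```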

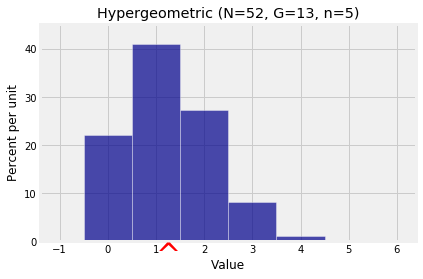

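```python
from datascience import *
from prob140 import *
import numpy as np
import matplotlib.pyplot as plt
from scipy import stats

# Number of hearts in a poker hand
N = 52  # population size
G = 13  # number of good elements (hearts)
n = 5   # sample size
k = np.arange(6)
# SciPy's argument order: (k, population size, number good, sample size)
probs = stats.hypergeom.pmf(k, N, G, n)
hyp_dist = Table().values(k).probabilities(probs)
Plot(hyp_dist, show_ev=True)
plt.title('Hypergeometric (N=52, G=13, n=5)');
```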

Answer

(a) 4 by the binomial expectation formula

(b) 4 by the hypergeometric expectation formula

8.5.3 Number of Missing Classes

A population consists of four classes of individuals, in the proportions 0.4, 0.3, 0.2, and 0.1. A random sample of $n$ individuals is chosen so that the choices are mutually independent. What is the expected number of classes that are missing in the sample?

If $M$ is the number of missing classes, then

$$
M = I_1 + I_2 + I_3 + I_4
$$

where for each $j$, $I_j$ is the indicator of “Class $j$ is missing in the sample”.

For Class $j$ to be missing in the sample, all $n$ selected individuals have to be from the other classes. Thus

$$
E(M) = 0.6^n + 0.7^n + 0.8^n + 0.9^n
$$

The four indicators aren’t independent but that doesn’t affect the additivity of expectation.
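As a rough check, the expected number of missing classes can be computed as a sum of the four probabilities of a class being missing, and compared with a simulation. This is a sketch under the assumptions of the example (independent choices with the stated proportions); the function names `expected_missing` and `simulated_missing` are hypothetical.

```python
import numpy as np

props = np.array([0.4, 0.3, 0.2, 0.1])  # class proportions from the example

def expected_missing(n, props=props):
    """Sum over classes of P(class j is missing) = (1 - p_j) ** n."""
    return float(np.sum((1 - props) ** n))

def simulated_missing(n, reps=100_000, seed=0):
    """Average number of missing classes over simulated samples of size n."""
    rng = np.random.default_rng(seed)
    counts = rng.multinomial(n, props, size=reps)  # class counts per sample
    return float((counts == 0).sum(axis=1).mean())

for n in (1, 5, 20):
    print(n, expected_missing(n), simulated_missing(n))
```

A sample of size 1 always misses exactly three classes, which gives a quick sanity check on both functions.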

