If the form of a distribution is intractable in that it is difficult to find exact probabilities by integration, then good estimates and bounds become important. Bounds on the tails of the distribution of a random variable help us quantify roughly how close to the mean the random variable is likely to be.

We already know two such bounds. Let $X$ be a random variable with expectation $\mu$ and SD $\sigma$.

19.4.1 Markov’s Bound on the Right Hand Tail

If $X$ is non-negative, then for every $c > 0$,

$$
P(X \ge c) ~ \le ~ \frac{\mu}{c}
$$

This bound depends only on the first moment of $X$ (and the fact that $X$ is non-negative).

19.4.2 Chebychev’s Bound on Two Tails

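For every $c > 0$,

$$
P(|X - \mu| \ge c) ~ \le ~ \frac{\sigma^2}{c^2}
$$

This bound depends on the first and second moments of $X$ since $\sigma^2 = E(X^2) - (E(X))^2$.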

In cases where both bounds apply, Chebychev often does better than Markov because it uses two moments instead of one. So it is reasonable to think that the more moments you know, the closer you can get to the tail probabilities.
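As a rough numerical illustration of the comparison above, the sketch below evaluates both bounds on a right hand tail. The exponential distribution with rate 1 is an assumption made only for this example (it is not used elsewhere in the section), and the function names are hypothetical.

```python
import math

def markov_bound(mu, c):
    """Markov: P(X >= c) <= mu / c for non-negative X and c > 0."""
    return mu / c

def chebychev_bound(sigma, dist):
    """Chebychev: P(|X - mu| >= dist) <= sigma^2 / dist^2 for dist > 0."""
    return sigma**2 / dist**2

# Illustrative assumption: X is exponential with rate 1, so mu = sigma = 1
# and the exact right hand tail is P(X >= c) = exp(-c).
mu, sigma = 1.0, 1.0

rows = []
for c in [1.5, 3.0, 5.0, 10.0]:
    exact = math.exp(-c)
    markov = markov_bound(mu, c)
    # For c > mu, the event {X >= c} is contained in {|X - mu| >= c - mu},
    # so Chebychev's two-tail bound also bounds the right hand tail.
    cheb = chebychev_bound(sigma, c - mu)
    rows.append((c, exact, markov, cheb))
    print(f"c={c:5.1f}  exact={exact:.5f}  Markov={markov:.5f}  Chebychev={cheb:.5f}")
```

For values of $c$ close to the mean, Chebychev's bound can exceed Markov's and can even exceed 1, which is why the comparison says "often" rather than "always".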

Moment generating functions can help get good bounds on tail probabilities. In what follows, we will assume that the moment generating function of $X$ is finite over the whole real line. If it is finite only over a smaller interval around 0, the calculations of the mgf below should be confined to that interval.


19.4.3 Exponential Bounds on Tails

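Let $X$ be a random variable with mgf $M_X$. We are going to find an upper bound for the right hand tail probability $P(X \ge c)$ for a fixed $c$.

To see how the moment generating function comes in, fix $t > 0$. The function $g$ defined by $g(x) = e^{tx}$ is increasing as well as non-negative. Because it is increasing,

$$
X \ge c ~ \iff ~ e^{tX} \ge e^{tc}
$$

Since $e^{tX}$ is a non-negative random variable, we can apply Markov’s inequality as follows.

$$
P(X \ge c) ~ = ~ P(e^{tX} \ge e^{tc}) ~ \le ~ \frac{E(e^{tX})}{e^{tc}} ~ = ~ \frac{M_X(t)}{e^{tc}}
$$

Since $t$ is fixed, $M_X(t)$ is constant. So we have shown that $P(X \ge c)$ is bounded above by $M_X(t)e^{-tc}$, which falls exponentially as a function of $c$.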
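To see the exponential decay numerically, here is a minimal sketch that evaluates the bound $M_X(t)/e^{tc}$ at one fixed $t$ for several values of $c$. The Poisson distribution, the rate `lam = 2`, and the choice `t = 1` are assumptions picked for illustration because the Poisson mgf is finite on the whole real line; the helper names are hypothetical.

```python
import math

def poisson_mgf(t, lam):
    """MGF of a Poisson(lam) random variable: exp(lam * (e^t - 1))."""
    return math.exp(lam * (math.exp(t) - 1))

def poisson_right_tail(c, lam):
    """Exact P(X >= c) for a non-negative integer c, as 1 - P(X <= c - 1)."""
    return 1 - sum(math.exp(-lam) * lam**k / math.factorial(k) for k in range(c))

lam, t = 2.0, 1.0   # hypothetical values chosen for illustration

results = []
for c in [4, 8, 12, 16]:
    exact = poisson_right_tail(c, lam)
    bound = poisson_mgf(t, lam) / math.exp(t * c)   # M_X(t) / e^{tc}
    results.append((c, exact, bound))
    print(f"c={c:2d}  exact={exact:.3e}  bound={bound:.3e}")
```

Each increase of 4 in $c$ multiplies the bound by the same factor $e^{-4t}$, which is the exponential decay described above.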

19.4.4 Chernoff Bound on the Right Tail

The calculation above is the first step in developing a Chernoff bound on the right hand tail probability $P(X \ge c)$ for a fixed $c$.

For the next step, notice that you can choose $t$ to be any positive number. For our fixed $c$, some choices of $t$ will give sharper upper bounds than others. The sharpest among all of the bounds will correspond to the value of $t$ that minimizes the right hand side. So the Chernoff bound has an optimized form:

$$
P(X \ge c) ~ \le ~ \min_{t > 0} \frac{M_X(t)}{e^{tc}}
$$
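The optimization over $t$ can be approximated numerically. The sketch below searches a grid of positive $t$ values; the Poisson example, the values `lam = 2` and `c = 8`, the grid, and the function name `chernoff_right_tail` are all assumptions made for illustration. A grid search is only one simple way to approximate the minimum.

```python
import math

def chernoff_right_tail(mgf, c, t_grid):
    """Return (bound, t) with the smallest mgf(t) / e^{tc} over the grid.

    Every positive t gives a valid upper bound on P(X >= c), so the
    minimum over the grid is valid too, though only approximately optimal.
    """
    return min((mgf(t) / math.exp(t * c), t) for t in t_grid)

lam, c = 2.0, 8   # hypothetical Poisson rate and threshold

def poisson_mgf(t):
    """MGF of a Poisson(lam) random variable."""
    return math.exp(lam * (math.exp(t) - 1))

t_grid = [k / 1000 for k in range(1, 3001)]             # t in (0, 3]
best_bound, best_t = chernoff_right_tail(poisson_mgf, c, t_grid)

fixed_t_bound = poisson_mgf(1.0) / math.exp(1.0 * c)    # the arbitrary choice t = 1
exact = 1 - sum(math.exp(-lam) * lam**k / math.factorial(k) for k in range(c))

print(f"exact tail      = {exact:.3e}")
print(f"bound at t = 1  = {fixed_t_bound:.3e}")
print(f"optimized bound = {best_bound:.3e} at t = {best_t:.3f}")
```

The optimized bound is never worse than the bound at any single grid value of $t$, and it still sits above the exact tail probability.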

19.4.5 Application to the Normal Distribution

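Suppose $X$ has the normal $(\mu, \sigma^2)$ distribution and we want to get a sense of how far $X$ can be above the mean. Fix $c > 0$. The exact chance that the value of $X$ is at least $c$ above the mean is

$$
P(X - \mu \ge c) ~ = ~ 1 - \Phi(c/\sigma)
$$

because the distribution of $X - \mu$ is normal $(0, \sigma^2)$. This exact answer looks neat and tidy, but the standard normal cdf $\Phi$ is not easy to work with analytically. Sometimes we can gain more insight from a good bound.

The mgf of the normal $(0, \sigma^2)$ distribution is $M_{X-\mu}(t) = e^{\sigma^2t^2/2}$, so the optimized Chernoff bound is

$$
P(X - \mu \ge c) ~ \le ~ \min_{t > 0} \frac{M_{X-\mu}(t)}{e^{tc}} ~ = ~ \min_{t > 0} e^{-ct + \sigma^2t^2/2}
$$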

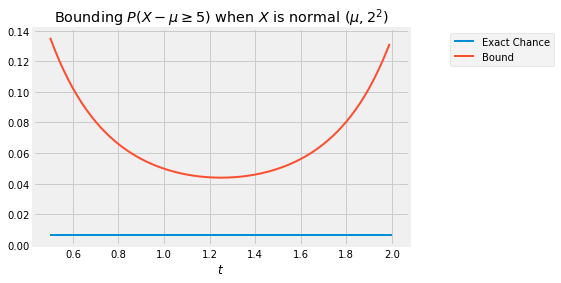

The curve in the accompanying figure is the graph of $\exp(-ct + \sigma^2t^2/2)$ as a function of $t$, in the case $\sigma = 2$ and $c = 5$. The flat line is the exact probability $P(X - \mu \ge c)$. The curve is always above the flat line: no matter what $t$ is, the bound is an upper bound. The sharpest of all the upper bounds corresponds to the minimizing value $t^*$, which is somewhere in the 1.2 to 1.3 range.

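To find the minimizing value of $t$ analytically, we will use the standard calculus method of minimization. But first we will simplify our calculations by observing that finding the point at which a positive function is minimized is the same as finding the point at which the log of the function is minimized. This is because $\log$ is an increasing function.

So the problem reduces to finding the value of $t$ that minimizes the function $h(t) = -ct + \sigma^2t^2/2$. By differentiation, the minimizing value $t^*$ solves

$$
c ~ = ~ \sigma^2 t^*
$$

and hence

$$
t^* ~ = ~ \frac{c}{\sigma^2}
$$

This is a minimum because $h''(t) = \sigma^2 > 0$, and it is positive because $c > 0$. So the Chernoff bound is

$$
P(X - \mu \ge c) ~ \le ~ e^{-ct^* + \sigma^2{t^*}^2/2} ~ = ~ e^{-c^2/(2\sigma^2)}
$$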
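The calculus can be checked numerically. The following sketch compares a grid search over $t$ with the closed form $t^* = c/\sigma^2$ and with the exact normal tail. The values `sigma = 2` and `c = 5` are illustrative assumptions, chosen so that $c/\sigma^2$ falls in the 1.2 to 1.3 range mentioned earlier; the exact tail uses the identity $1 - \Phi(z) = \frac{1}{2}\,\mathrm{erfc}(z/\sqrt{2})$.

```python
import math

sigma, c = 2.0, 5.0   # illustrative values; c / sigma^2 = 1.25

def bound_at(t):
    """Chernoff bound for a fixed t: exp(-c t + sigma^2 t^2 / 2)."""
    return math.exp(-c * t + sigma**2 * t**2 / 2)

# Numerical minimization over a grid of positive t values
t_grid = [k / 1000 for k in range(1, 3001)]
t_numeric = min(t_grid, key=bound_at)

# Closed forms from the derivation
t_star = c / sigma**2
closed_form = math.exp(-c**2 / (2 * sigma**2))

# Exact tail 1 - Phi(c / sigma), via the complementary error function
exact = 0.5 * math.erfc(c / (sigma * math.sqrt(2)))

print(f"grid minimizer  t = {t_numeric:.3f},  calculus t* = {t_star:.3f}")
print(f"bound at t*     = {bound_at(t_star):.5f}")
print(f"closed form     = {closed_form:.5f}")
print(f"exact tail      = {exact:.5f}")
```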

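Compare this with the bounds we already have. Markov’s bound can’t be applied directly as $X - \mu$ can have negative values. Because the distribution of $X - \mu$ is symmetric about 0, Chebychev’s bound becomes

$$
P(X - \mu \ge c) ~ = ~ \frac{1}{2} P(|X - \mu| \ge c) ~ \le ~ \frac{\sigma^2}{2c^2}
$$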

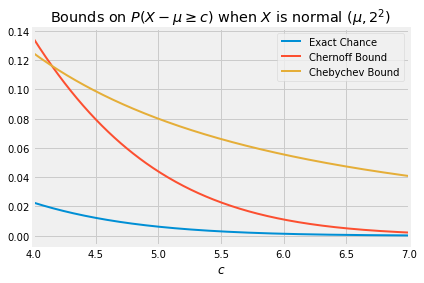

When $c$ is large, the optimized Chernoff bound is quite a bit sharper than Chebychev’s. In the case $\sigma = 2$, the accompanying graph shows the exact value of $P(X - \mu \ge c)$ as a function of $c$, along with the Chernoff and Chebychev bounds.
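A small table makes the comparison concrete. This sketch tabulates the exact normal tail, the Chernoff bound $e^{-c^2/(2\sigma^2)}$, and the one-tail Chebychev bound $\sigma^2/(2c^2)$. The value `sigma = 2` and the list of $c$ values are assumptions for illustration, and the function names are hypothetical.

```python
import math

sigma = 2.0   # hypothetical SD chosen for illustration

def exact_tail(c):
    """P(X - mu >= c) = 1 - Phi(c / sigma) for normal X."""
    return 0.5 * math.erfc(c / (sigma * math.sqrt(2)))

def chernoff(c):
    """Optimized Chernoff bound exp(-c^2 / (2 sigma^2))."""
    return math.exp(-c**2 / (2 * sigma**2))

def chebychev_one_tail(c):
    """Chebychev's bound halved by symmetry: sigma^2 / (2 c^2)."""
    return sigma**2 / (2 * c**2)

table = [(c, exact_tail(c), chernoff(c), chebychev_one_tail(c))
         for c in [2, 4, 6, 8, 10]]

for c, exact, chern, cheb in table:
    print(f"c={c:2d}  exact={exact:.2e}  Chernoff={chern:.2e}  Chebychev={cheb:.2e}")
```

For $c$ within a couple of SDs of the mean, the Chebychev value can be the smaller of the two. The Chernoff bound takes over as $c$ grows because it decays exponentially in $c^2$ while Chebychev's decays only like $1/c^2$.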

19.4.6 Chernoff Bound on the Left Tail

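By an analogous argument we can derive a Chernoff bound on the left tail of a distribution. For a fixed $t > 0$, the function $g(x) = e^{-tx}$ is decreasing and non-negative. So for $t > 0$ and any fixed $c$,

$$
P(X \le c) ~ = ~ P(e^{-tX} \ge e^{-tc}) ~ \le ~ \frac{E(e^{-tX})}{e^{-tc}} ~ = ~ \frac{M_X(-t)}{e^{-tc}}
$$

and therefore

$$
P(X \le c) ~ \le ~ \min_{t > 0} \frac{M_X(-t)}{e^{-tc}}
$$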
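The left tail bound can be tried out in the same way as the right tail bound. In the sketch below, the Poisson distribution with `lam = 10`, the threshold `c = 3`, and the grid of $t$ values are assumptions made for illustration; the function names are hypothetical.

```python
import math

lam, c = 10.0, 3   # hypothetical Poisson rate and a threshold below the mean

def poisson_mgf(s):
    """MGF of a Poisson(lam) random variable, finite for every real s."""
    return math.exp(lam * (math.exp(s) - 1))

def left_tail_bound(t):
    """M_X(-t) / e^{-tc} for a fixed t > 0."""
    return poisson_mgf(-t) / math.exp(-t * c)

# Approximate the minimum over t > 0 by a grid search
t_grid = [k / 1000 for k in range(1, 3001)]
chernoff_left = min(left_tail_bound(t) for t in t_grid)

# Exact P(X <= c) by summing the Poisson probabilities of 0, 1, ..., c
exact_left = sum(math.exp(-lam) * lam**k / math.factorial(k) for k in range(c + 1))

print(f"exact P(X <= {c})   = {exact_left:.5f}")
print(f"Chernoff left bound = {chernoff_left:.5f}")
```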

19.4.7 Sums of Independent Random Variables

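The Chernoff bound is often applied to sums of independent random variables. Let $X_1, X_2, \ldots, X_n$ be independent and let $S_n = X_1 + X_2 + \cdots + X_n$. Fix any number $c$. Because the mgf of a sum of independent random variables is the product of the individual mgfs, for every $t > 0$,

$$
P(S_n \ge c) ~ \le ~ \frac{M_{S_n}(t)}{e^{tc}} ~ = ~ \frac{\prod_{i=1}^n M_{X_i}(t)}{e^{tc}}
$$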

This result is useful for finding bounds on binomial tails because the moment generating function of a Bernoulli random variable has a straightforward form. It is also used for bounding tails of sums of independent indicators with possibly different success probabilities. We will leave all this for a subsequent course.
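As a brief preview only, the sketch below applies the product form to a sum of independent indicators, using the Bernoulli mgf $1 - p + pe^t$. The values `n = 100`, `p = 0.5`, `c = 70`, the grid, and the function names are assumptions made for illustration. The success probabilities are taken to be equal so that the exact binomial tail is available for comparison, but `sum_bound` accepts any list of probabilities.

```python
import math

def bernoulli_mgf(t, p):
    """MGF of an indicator with success probability p: 1 - p + p e^t."""
    return 1 - p + p * math.exp(t)

def sum_bound(t, ps, c):
    """Product of the individual MGFs at t, divided by e^{tc}."""
    return math.prod(bernoulli_mgf(t, p) for p in ps) / math.exp(t * c)

n, p, c = 100, 0.5, 70   # hypothetical values for illustration
ps = [p] * n             # equal probabilities, so S_n is binomial (n, p)

# Approximate the minimum over t > 0 by a grid search
t_grid = [k / 1000 for k in range(1, 3001)]
chernoff = min(sum_bound(t, ps, c) for t in t_grid)

# Exact binomial tail P(S_n >= c) for comparison
exact = sum(math.comb(n, k) * p**k * (1 - p)**(n - k) for k in range(c, n + 1))

print(f"exact P(S_n >= {c}) = {exact:.3e}")
print(f"Chernoff bound      = {chernoff:.3e}")
```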