One way to think about the SD is in terms of errors in prediction. Suppose I am going to generate a value of the random variable , and I ask you to predict the value I am going to get. What should you use as your predictor?

A natural choice is , the expectation of . But you could choose any number . The error that you will make is . About how big is that? For most reasonable choices of , the error will sometimes be positive and sometimes negative. To find the rough size of this error, we will avoid cancellation as before, and start by calculating the mean squared error of the predictor :

Notice that by definition, the variance of is the mean squared error of using as the predictor.

🎥 See More

We will now show that is the least squares constant predictor, that is, it has the smallest mean squared error among all constant predictors. Since we have guessed that is the best choice, we will organize the algebra around that value.

with equality if and only if .

12.2.1The Mean as a Least Squares Predictor¶

What we have shown is the predictor has the smallest mean squared error among all choices . That smallest mean squared error is the variance of , and hence the smallest root mean squared error is the SD .

This is why a common approach to prediction is, “My guess is the mean, and I’ll be off by about an SD.”

Answer

(a) 16 dollars

(b) 9 squared dollars

(c) 3 dollars

12.2.2German Tanks, Revisited¶

Recall the German tanks problem in which we have a sample drawn at random without replacement from for some fixed , and we are trying to estimate .

We came up with two unbiased estimators of :

An estimator based on the sample mean: where is the sample average

An estimator based on the sample maximum: where .

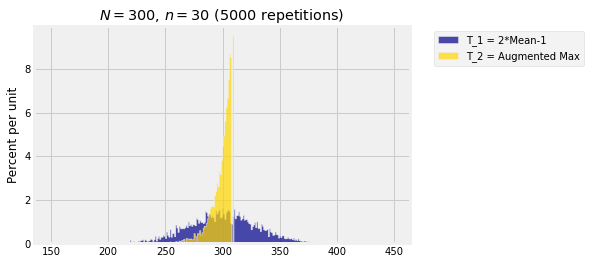

Here are simulated distributions of and in the case and , based on 5000 repetitions.

def simulate_T1_T2(N, n):

"""Returns one pair of simulated values of T_1 and T_2

based on the same simple random sample"""

tanks = np.arange(1, N+1)

sample = np.random.choice(tanks, size=n, replace=False)

t1 = 2*np.mean(sample) - 1

t2 = max(sample)*(n+1)/n - 1

return [t1, t2]

def compare_T1_T2(N, n, repetitions):

"""Returns a table of simulated values of T_1 and T_2,

with the number of rows = repetitions

and each row containing the two estimates based on the same simple random sample"""

tbl = Table(['T_1 = 2*Mean-1', 'T_2 = Augmented Max'])

for i in range(repetitions):

tbl.append(simulate_T1_T2(N, n))

return tbl

N = 300

n = 30

repetitions = 5000

comparison = compare_T1_T2(N, n, 5000)

comparison.hist(bins=np.arange(N/2, 3*N/2))

plt.title('$N =$'+str(N)+', $n =$'+str(n)+' ('+str(repetitions)+' repetitions)');

We know that both estimators are unbiased: . But is clear from the simulation that and hence is a better estimator than .

The empirical values of the two means and standard deviations based on this simulation are calculated below.

t1 = comparison.column(0)

np.mean(t1), np.std(t1)(299.95684000000006, 29.99859216498445)t2 = comparison.column(1)

np.mean(t2), np.std(t2)(299.9418, 9.258092549164154)These standard deviations are calculated based on empirical data given a specified value of the parameter and a specified sample size . In the next chapter we will develop properties of the SD that will allow us to obtain algebraic expressions for and for all and .